API Rate Limiting

What is API rate limiting?

As an API developer, you need to make sure your APIs run as efficiently as possible. Otherwise, everyone using your API will suffer from slow performance.

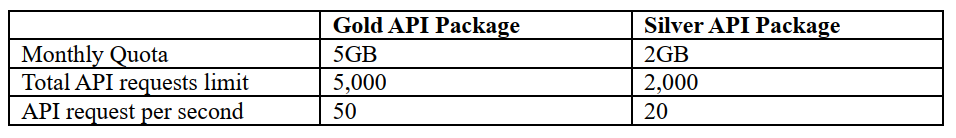

API rate limiting enforces a limit on the number of requests a client can make within a given timeframe (for example, 20 API requests per second) or on the quantity of data a client can consume (for example, 5 GB per month).
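To make the request-count variant concrete, here is a minimal fixed-window counter in Python. It is a sketch, not AppScaler's implementation. The class name `FixedWindowRateLimiter`, the per-client keying, and the injectable `clock` are all assumptions made for illustration. Each client may make at most `limit` requests in each window of `window_seconds`, and its count resets when a new window begins.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """Hypothetical fixed-window limiter: at most `limit` requests per client per window."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so behaviour can be tested deterministically
        # client_id -> (index of the window last seen, requests counted in it)
        self._counters: Dict[str, Tuple[int, int]] = {}

    def allow(self, client_id: str) -> bool:
        """Count one request for `client_id`; return False if it would exceed the limit."""
        window = int(self.clock() // self.window_seconds)
        last_window, count = self._counters.get(client_id, (window, 0))
        if last_window != window:
            count = 0  # a new window has started, so this client's count resets
        if count >= self.limit:
            return False
        self._counters[client_id] = (window, count + 1)
        return True


# Usage: 20 requests per second, driven by a fake clock.
fake_now = [0.0]
limiter = FixedWindowRateLimiter(limit=20, window_seconds=1.0, clock=lambda: fake_now[0])
burst = [limiter.allow("customer-42") for _ in range(21)]
print(burst.count(True), burst[-1])   # 20 False
fake_now[0] = 1.0                     # move into the next one-second window
print(limiter.allow("customer-42"))   # True
```

A fixed window is the simplest scheme, but it lets a client send up to twice the limit in a short burst that straddles a window boundary. Sliding-window or token-bucket algorithms smooth this out at the cost of slightly more state per client.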

Why API rate limiting matters

API rate limiting is an essential component of Internet security. Without it, a denial-of-service (DoS) attack can overwhelm a server with an unlimited number of API requests. Rate limiting also helps make your API scalable. If your API suddenly becomes popular, unexpected spikes in traffic can cause severe performance issues.

Where to do API rate limiting

If API rate limiting is implemented in the application itself, the application must, on receiving each request, check with a service or data source to determine whether the request should be fulfilled under the user-defined rate limit. This places an extra resource burden on the backend applications.

Decoupling API rate limiting from the API itself is therefore critical to API architecture.

AppScaler, as a load balancer, is designed to handle connections much more efficiently, which makes it an ideal place to implement API rate limiting as an API proxy. You can offload the rate limiting burden from the backend applications to AppScaler, gaining performance as well as the agility to change the rate limiting logic in the future.
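The decoupling idea can be illustrated with a small Python sketch. A hypothetical `RateLimitingProxy` sits in front of a backend handler and counts requests per client. It answers over-limit requests itself with HTTP 429 (Too Many Requests) and a `Retry-After` header, so the backend never sees them. This does not model AppScaler's actual configuration or API. The class, the `Response` type, and the fixed-window counter are assumptions made for illustration.

```python
import math
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class Response:
    status: int
    body: str
    headers: Dict[str, str] = field(default_factory=dict)


class RateLimitingProxy:
    """Hypothetical proxy that enforces a per-client limit before calling the backend."""

    def __init__(self, backend: Callable[[str], Response], limit: int,
                 window_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.backend = backend
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self._counters: Dict[str, Tuple[int, int]] = {}  # client -> (window, count)

    def handle(self, client_id: str, request: str) -> Response:
        now = self.clock()
        window = int(now // self.window_seconds)
        last_window, count = self._counters.get(client_id, (window, 0))
        if last_window != window:
            count = 0  # new window: reset this client's count
        if count >= self.limit:
            # Rejected at the proxy: the backend does no work for this request.
            seconds_left = (window + 1) * self.window_seconds - now
            retry_after = max(1, math.ceil(seconds_left))
            return Response(429, "Too Many Requests", {"Retry-After": str(retry_after)})
        self._counters[client_id] = (window, count + 1)
        return self.backend(request)  # within the limit: forward unchanged


# Usage: the backend itself contains no rate limiting logic.
backend_calls = []

def backend(request: str) -> Response:
    backend_calls.append(request)
    return Response(200, f"handled {request}")

proxy = RateLimitingProxy(backend, limit=2, window_seconds=1.0, clock=lambda: 0.25)
statuses = [proxy.handle("customer-42", f"req{i}").status for i in range(3)]
print(statuses, len(backend_calls))  # [200, 200, 429] 2
```

Because the backend only receives requests that are within the limit, the limiting policy can be changed at the proxy without touching the application. With several proxy instances, the counters would typically live in a shared store rather than in each process's memory.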

API rate limiting example

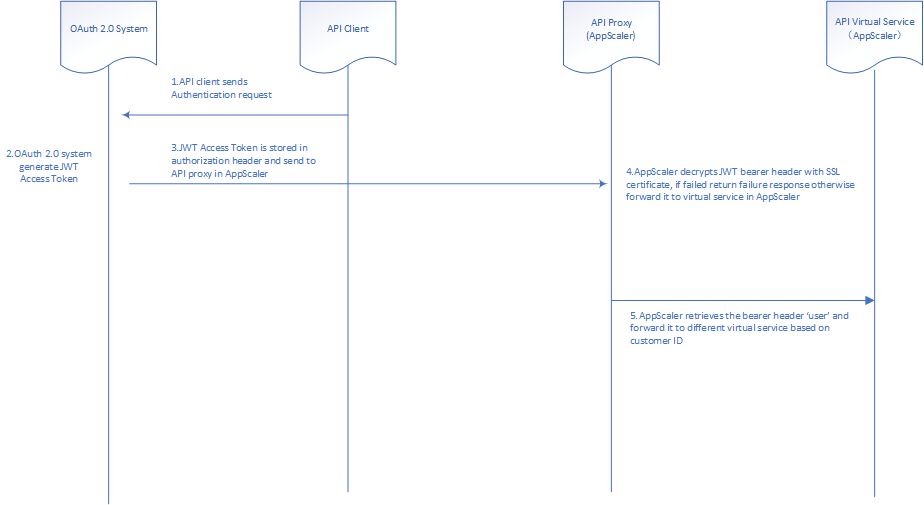

- The RESTful API is authenticated with OAuth 2.0

- The OAuth JWT is encrypted with an SSL certificate

- The customer ID is stored in the bearer header ‘User’

- The API rate limiting service packages are listed below
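A per-customer check along the lines of this example might look like the following Python sketch. It assumes the proxy has already validated the OAuth 2.0 token and normalized header names, so the customer ID can be read directly from the `User` header. The package names and limits in `PACKAGES`, the `CUSTOMER_PACKAGE` mapping, and the `PackageRateLimiter` class are hypothetical placeholders because the actual service packages are not given here. Only the 20 requests-per-second figure comes from the text.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical service packages: requests allowed per second.
PACKAGES: Dict[str, int] = {"basic": 20, "premium": 100}

# Hypothetical lookup from customer ID to the package the customer bought.
CUSTOMER_PACKAGE: Dict[str, str] = {"cust-001": "basic", "cust-002": "premium"}


class PackageRateLimiter:
    """Hypothetical per-customer limiter keyed by the 'User' header."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self._counters: Dict[str, Tuple[int, int]] = {}  # customer -> (second, count)

    def check(self, headers: Dict[str, str]) -> int:
        """Return 200 to forward the request, or an HTTP error status to reject it."""
        customer_id = headers.get("User")  # assumes header names are already normalized
        if customer_id is None:
            return 401  # no customer ID: the request cannot be attributed
        package = CUSTOMER_PACKAGE.get(customer_id)
        if package is None:
            return 403  # unknown customer or no package on file
        limit = PACKAGES[package]
        second = int(self.clock())  # one-second fixed window
        last_second, count = self._counters.get(customer_id, (second, 0))
        if last_second != second:
            count = 0  # a new second has started
        if count >= limit:
            return 429  # package limit reached for this second
        self._counters[customer_id] = (second, count + 1)
        return 200


limiter = PackageRateLimiter(clock=lambda: 0.0)
codes = [limiter.check({"User": "cust-001"}) for _ in range(21)]
print(codes.count(200), codes[-1])          # 20 429
print(limiter.check({"User": "cust-002"}))  # 200
print(limiter.check({}))                    # 401
```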
